pip install apache-airflow-providers-microsoft-azure

Provider package apache-airflow-providers-microsoft-azure for Apache Airflow

Project description

As data professionals, our role is to extract insight, build AI models and present our findings to users through dashboards, APIs and reports. Although the development phase is often the most time-consuming part of a project, automating jobs and monitoring them is essential to generate value over time. At element61, we're fond of Azure Data Factory and Airflow for this purpose.

To try a release candidate of the provider on an existing image, extend the image with a Dockerfile containing FROM apache/airflow:2.0.1 followed by RUN pip install apache-airflow-providers-microsoft-azure==1.2.0rc1. Once version 2.0.2 of Airflow is released (expected in a week or two), the released packages, including 1.2.0 of microsoft-azure, will be used automatically.

Package apache-airflow-providers-microsoft-azure

Release: 2.0.0

Provider package

This is a provider package for microsoft.azure provider. All classes for this provider package are in airflow.providers.microsoft.azure python package.

You can find package information and changelog for the provider in the documentation.

Installation

You can install this package on top of an existing airflow 2.* installation via pip install apache-airflow-providers-microsoft-azure
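To confirm that the install landed in the environment Airflow actually uses, a quick check from Python can help. The sketch below relies only on the standard library (Python 3.8 or newer); the helper name installed_version is made up for this example and is not part of the provider.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional

# Distribution name as published on PyPI.
PROVIDER = "apache-airflow-providers-microsoft-azure"


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version(PROVIDER)
    if found is None:
        print(f"{PROVIDER} is not installed in this environment")
    else:
        print(f"{PROVIDER} {found} is installed")
```

Run it with the same interpreter that runs the scheduler and webserver, since a provider installed into a different virtual environment will not be picked up.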

PIP requirements

| PIP package | Version required |
|---|---|
| azure-batch | >=8.0.0 |
| azure-cosmos | >=3.0.1,<4 |
| azure-datalake-store | >=0.0.45 |
| azure-identity | >=1.3.1 |
| azure-keyvault | >=4.1.0 |
| azure-kusto-data | >=0.0.43,<0.1 |
| azure-mgmt-containerinstance | >=1.5.0,<2.0 |
| azure-mgmt-datafactory | >=1.0.0,<2.0 |
| azure-mgmt-datalake-store | >=0.5.0 |
| azure-mgmt-resource | >=2.2.0 |
| azure-storage-blob | >=12.7.0 |
| azure-storage-common | >=2.1.0 |
| azure-storage-file | >=2.1.0 |

Cross provider package dependencies

Those are dependencies that might be needed in order to use all the features of the package. You need to install the specified provider packages in order to use them.

You can install such cross-provider dependencies when installing from PyPI by naming the extra in brackets. For example: pip install apache-airflow-providers-microsoft-azure[oracle]

| Dependent package | Extra |
|---|---|
| apache-airflow-providers-google | |
| apache-airflow-providers-oracle | oracle |

Release history

2.0.0

2.0.0rc1 pre-release

1.3.0

1.3.0rc1 pre-release

1.2.0

1.2.0rc2 pre-release

1.2.0rc1 pre-release

1.1.0

1.1.0rc1 pre-release

1.0.0

1.0.0rc1 pre-release

1.0.0b2 pre-release

1.0.0b1 pre-release

Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

| Filename | Size | File type | Python version |
|---|---|---|---|
| apache_airflow_providers_microsoft_azure-2.0.0-py3-none-any.whl | 83.2 kB | Wheel | py3 |
| apache-airflow-providers-microsoft-azure-2.0.0.tar.gz | 58.9 kB | Source | None |

Hashes for apache_airflow_providers_microsoft_azure-2.0.0-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 2c6d3a4317d98a55dcc35b41a10f5bf07524d32fa25f3c5114b0d25d2920a2f3 |
| MD5 | a99ed4e956061f96c676b259d1be0abb |
| BLAKE2-256 | 27771eee981c70ec3f9c899d9042828976d73d247c922767ff363e884a3ef81a |

Hashes for apache-airflow-providers-microsoft-azure-2.0.0.tar.gz

| Algorithm | Hash digest |
|---|---|
| SHA256 | a8a79feba890806ba954feabfc487579e6b5e7c7c60bddcc334c0f95eca037f5 |
| MD5 | 0ceb21a0d7f9454912e55b7f94b97c98 |
| BLAKE2-256 | d130ed8e3e0f247637c71c166c4d7ac24a035903cfd1d3f66906366df742a781 |

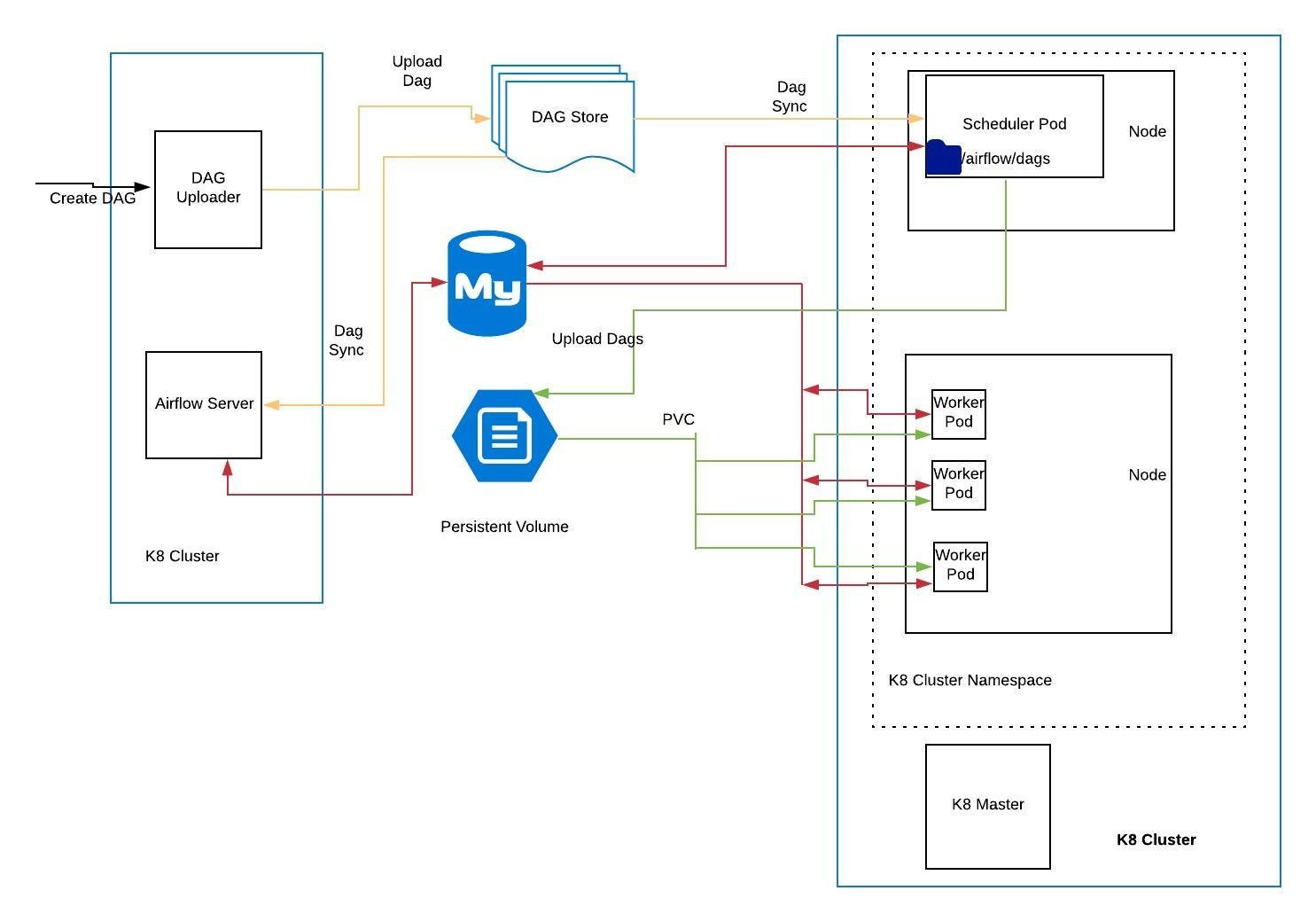

This post will describe how you can deploy Apache Airflow using the Kubernetes executor on Azure Kubernetes Service (AKS). It will also go into detail about registering a proper domain name for Airflow and serving it over HTTPS. To get the most out of this post, basic knowledge of helm, kubectl and docker is advised, as the commands won't be explained in detail here. In short, Docker is currently the most popular container platform and allows you to isolate and pack self-contained environments. Kubernetes (accessible via the command line tool kubectl) is a powerful and comprehensive platform for orchestrating docker containers. Helm is a layer on top of kubectl and is an application manager for Kubernetes, making it easy to share and install complex applications on Kubernetes.

Getting started

To get started and follow along:

- Clone the Airflow docker image repository

- Clone the Airflow helm chart

Make a copy of ./airflow-helm/airflow.yaml to ./airflow-helm/airflow-local.yaml. We'll be modifying this file throughout this guide.

Kubernetes Executor on Azure Kubernetes Service (AKS)

The kubernetes executor for Airflow runs every single task in a separate pod. It does so by starting a new run of the task using the airflow run command in a new pod. The executor also makes sure the new pod will receive a connection to the database and the location of DAGs and logs.

AKS is a managed Kubernetes service running on the Microsoft Azure cloud. It is assumed the reader has already deployed such a cluster – this is in fact quite easy using the quick start guide. Note: this guide was written using AKS version 1.12.7.

Airflow uses the fernet key to encrypt secret values, such as connection passwords, stored in the database. If the executor does not have access to the fernet key it cannot decode connections. To make sure this is possible set the following value in airflow-local.yaml:

Use this to generate a fernet key.
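One way to produce such a key, sketched here with only the standard library: a Fernet key is 32 random bytes encoded as URL-safe base64, which is also what Fernet.generate_key() from the cryptography package returns. The function name generate_fernet_key is chosen for this example.

```python
import base64
import os


def generate_fernet_key() -> str:
    """Return a new Fernet key: 32 random bytes, URL-safe base64 encoded."""
    return base64.urlsafe_b64encode(os.urandom(32)).decode("ascii")


if __name__ == "__main__":
    # Print one key; treat the output as a secret and keep it out of version control.
    print(generate_fernet_key())
```

Generate the key once and reuse it for every component. A different key on the executor pods would leave them unable to decrypt connections written by the webserver or scheduler.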

This setting will make sure the fernet key gets propagated to the executor pods. This is done by the Kubernetes Executor in Airflow automagically.

Deploying with Helm

In addition to the airflow-helm repository make sure your kubectl is configured to use the correct AKS cluster (if you have more than one). Assuming you have a Kubernetes cluster called aks-airflow you can use the azure CLI or kubectl.

or

respectively. Note that the latter one only works if you've invoked the former command at least once.

Azure Postgres

To make full use of cloud features we'll connect to a managed Azure Postgres instance. If you don't have one, the quick start guide is your friend. All state for Airflow is stored in the metastore. Choosing this managed database will also take care of backups, which is one less thing to worry about.

Now, the docker image used in the helm chart uses an entrypoint.sh which makes some nasty assumptions:

It creates the AIRFLOW__CORE__SQL_ALCHEMY_CONN value given the postgres host, port, user and password.

The issue is that it expects an unencrypted (no SSL) connection by default. Since this blog post uses an external postgres instance we must use SSL encryption.

The easiest solution to this problem is to modify the Dockerfile and completely remove the ENTRYPOINT and CMD lines. This does involve creating your own image and pushing it to your container registry. The Azure Container Registry (ACR) would serve that purpose very well.

We can then proceed to create the user and database for Airflow using psql. The easiest way to do this is to log in to the Azure Portal, open a cloud shell and connect to the postgres database with your admin user. From here you can create the user, database and access rights for Airflow with

You can then proceed to set the following value (assuming your postgres instance is called posgresdbforairflow) in airflow-local.yaml:

Note the sslmode=require at the end, which tells Airflow to use an encrypted connection to postgres.
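Assembling that connection string by hand is error-prone, mostly because of characters that need escaping. The sketch below builds it in Python under a few assumptions that are not stated in this guide: the host follows the pattern servername.postgres.database.azure.com, the login takes the form user@servername (as Azure Database for PostgreSQL single server expects), and the psycopg2 driver is used. The function name build_sql_alchemy_conn and the sample credentials are hypothetical.

```python
from urllib.parse import quote


def build_sql_alchemy_conn(
    server: str,
    user: str,
    password: str,
    database: str,
    port: int = 5432,
) -> str:
    """Build a SQLAlchemy URL for a managed Postgres instance with SSL required."""
    # Assumption: the login is "user@server"; the "@" must be percent-encoded
    # so it is not mistaken for the separator between credentials and host.
    login = quote(f"{user}@{server}", safe="")
    secret = quote(password, safe="")
    # Assumption: host name pattern of the managed Azure Postgres service.
    host = f"{server}.postgres.database.azure.com"
    return (
        f"postgresql+psycopg2://{login}:{secret}@{host}:{port}/{database}"
        "?sslmode=require"
    )


if __name__ == "__main__":
    # Placeholder credentials for illustration only.
    print(build_sql_alchemy_conn("posgresdbforairflow", "airflow", "change-me", "airflow"))
```

Percent-encoding the password matters as much as the login: a password containing / or @ would otherwise be parsed as part of the URL structure.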

Since we use a custom image we have to tell this to helm. Set the following values in airflow-local.yaml:

Note the acr-auth pull secret. You can either create this yourself or – better yet – let helm take care of it. To let helm create the secret for you, set the following values in airflow-local.yaml:

The sqlalchemy connection string is also propagated to the executor pods. Like the fernet key, this is done by the Kubernetes Executor.

Persistent logs and dags with Azure Fileshare

Microsoft Azure provides a way to mount SMB fileshares to any Kubernetes pod. To enable persistent logging we'll be configuring the helm chart to mount an Azure File Share (AFS). Setting up logrotate is out of scope, but it is highly recommended since Airflow (especially the scheduler) generates a LOT of logs. In this guide, we'll also be using an AFS for the location of the dags.

Set the following values to enable logging to a fileshare in airflow-local.yaml.

The value of shareName must match an AFS that you've created before deploying the helm chart.

Now everything for persistent logging and persistent dags has been set up.

This concludes all work with helm and airflow is now ready to be deployed! Run the following command from the path where your airflow-local.yaml is located:

The next step would be to exec -it into the webserver or scheduler pod and create Airflow users. This is out of scope for this guide.

FQDN with Ingress controller

Airflow is currently running under its own service and IP in the cluster. You could reach the web server by port-forwarding the pod or the service using kubectl. But much nicer is assigning a proper DNS name to Airflow and making it reachable over HTTPS. Microsoft Azure has an excellent guide that explains all the steps needed to get this working. Everything below – up to the 'Chaoskube' section – is a summary of that guide.

Deploying an ingress controller

If your AKS cluster is configured without RBAC you can use the following command to deploy the ingress controller.

This will assign a publicly available IP address to an NGINX pod which currently points to nothing. We'll fix that. You can see this IP address become available by watching the services:

Configuring a DNS name

Using the IP address created by the ingress controller you can now register a DNS name in Azure. The following bash commands take care of that:

Make it HTTPS

Now let's make it secure by configuring a certificate manager that will automatically create and renew SSL certificates based on the ingress route. The following bash commands take care of that:

Next, install letsencrypt to enable signed certificates.

After the script has completed you now have a DNS name pointing to the ingress controller and a signed certificate. The only step remaining to make airflow accessible is configuring the controller to make sure it points to the well hidden airflow web service. Create a new file called ingress-routes.yaml containing

Run

to install it.

Now airflow is accessible over HTTPS on https://yourairflowdnsname.yourazurelocation.cloudapp.azure.com

Chaoskube

As avid Airflow users might have noticed, the scheduler occasionally shows funky behaviour: it stops scheduling tasks. A respected – though hacky – solution is to restart the scheduler every now and then. The way to solve this in Kubernetes is by simply destroying the scheduler pod. Kubernetes will then automatically boot up a new scheduler pod.

Enter chaoskube. This amazing little tool – which also runs on your cluster – can be configured to kill pods within your cluster. It is highly configurable to target any pod to your liking.

Using the following command you can configure it to target only the Airflow scheduler pod.

Concluding

Using a few highly available Azure services and a little effort you've now deployed a scalable Airflow solution on Kubernetes backed by a managed Postgres instance. Airflow also has a fully qualified domain name and is reachable over HTTPS. The Kubernetes executor lets Airflow scale with the capacity of the cluster, without having to manage a fixed pool of workers.
